Table of contents

- Introduction:

- What is Kubernetes and why is it important?

- What is the difference between Docker Swarm and Kubernetes?

- How does Kubernetes handle network communication between containers?

- How does Kubernetes handle scaling of applications?

- What is a Kubernetes Deployment, and how does it differ from a ReplicaSet?

- Can you explain the concept of rolling updates in Kubernetes?

- How does Kubernetes handle network security and access control?

- Can you give an example of how Kubernetes can be used to deploy a highly available application?

- What is a namespace in Kubernetes?

- How does Ingress help in Kubernetes?

- Explain different types of services in Kubernetes.

- Can you explain the concept of self-healing in Kubernetes and give examples of how it works?

- How does Kubernetes handle storage management for containers?

- How does the NodePort service work?

- What is a multinode cluster and a single-node cluster in Kubernetes?

- What is the difference between create and apply in Kubernetes?

- Conclusion:

Introduction:

In recent years, Kubernetes has emerged as a leading container orchestration platform, revolutionizing the way applications are deployed and managed. As a DevOps engineer, it is crucial to have a deep understanding of Kubernetes to excel in the field. In this blog, we will explore and answer some common Kubernetes interview questions, shedding light on its significance and key concepts. Let's dive in!

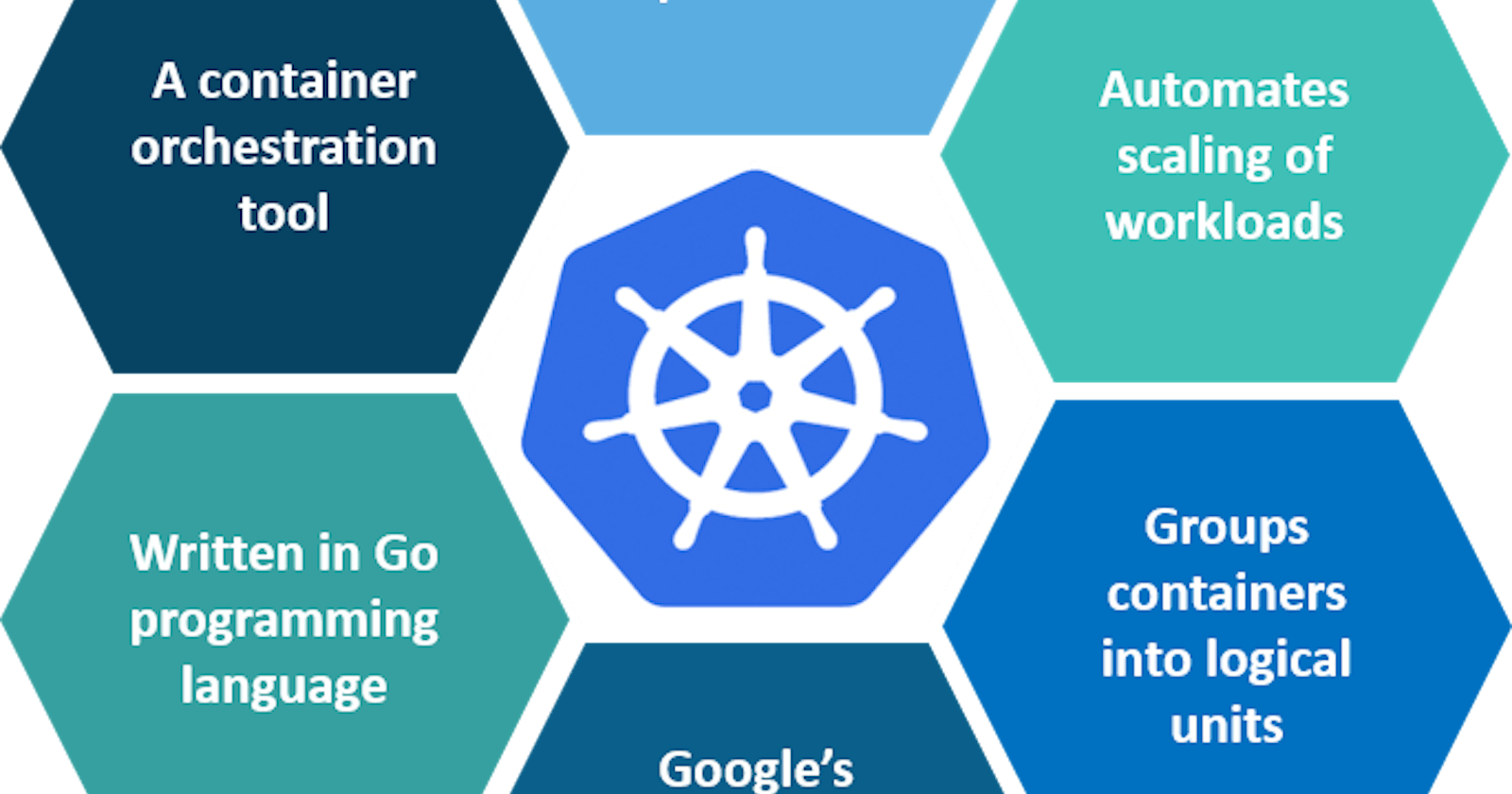

What is Kubernetes and why is it important?

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications across a cluster of machines. You describe the desired state of your application (for example, "run three replicas of this image"), and Kubernetes continuously works to make the cluster match it. This matters because it simplifies application deployment, turns scaling into a matter of changing a number or enabling an autoscaler, improves availability by replacing failed containers, and packs workloads onto nodes for efficient resource utilization.

What is the difference between Docker Swarm and Kubernetes?

Docker Swarm and Kubernetes are both container orchestration platforms, but they differ in scope. Docker Swarm is Docker's native clustering and orchestration mode: it is built into the Docker Engine, is quick to set up, and can deploy stacks from familiar Docker Compose files. Kubernetes is a more comprehensive and extensible platform hosted by the Cloud Native Computing Foundation (CNCF). Both provide service discovery and restart failed containers, but Kubernetes adds features such as metric-based autoscaling, fine-grained network policies, RBAC, custom resources, and a much larger ecosystem of tools and managed offerings, which makes it the more common choice for large-scale, production-grade deployments.

How does Kubernetes handle network communication between containers?

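Kubernetes uses a flat networking model: every Pod gets its own unique IP address within the cluster, and any Pod can reach any other Pod by that IP without NAT, even across nodes. Containers inside the same Pod share that IP and network namespace, so they talk to each other over localhost. Kubernetes does not implement Pod networking itself; it delegates it to a network plugin that follows the Container Network Interface (CNI) specification, such as Calico, Flannel, or Cilium. Depending on the plugin, the Pod network is built with an overlay (for example, VXLAN) or with plain routing. On top of this, Services give a group of Pods a stable virtual IP and DNS name, so clients don't need to track individual Pod IPs.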
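The "one IP per Pod" model is easiest to see in a Pod with two containers. Below is a minimal sketch, assuming Python is used only to generate the manifest: it builds a Pod as a plain dict and prints it as JSON, which kubectl apply -f also accepts. The Pod name, container names, and image tags are illustrative choices, not anything Kubernetes requires.

```python
import json


def two_container_pod(name: str = "web") -> dict:
    """Build a Pod whose two containers share one network namespace (one Pod IP)."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {
                    # Main container; nginx listens on port 80 by default.
                    "name": "app",
                    "image": "nginx:1.25",  # illustrative image tag
                    "ports": [{"containerPort": 80}],
                },
                {
                    # Sidecar reaches the app over localhost because both
                    # containers share the Pod's IP address.
                    "name": "probe-sidecar",  # hypothetical sidecar name
                    "image": "busybox:1.36",
                    "command": [
                        "sh",
                        "-c",
                        "while true; do wget -qO- http://localhost:80 >/dev/null; sleep 10; done",
                    ],
                },
            ]
        },
    }


if __name__ == "__main__":
    print(json.dumps(two_container_pod(), indent=2))
```

Saving the output to pod.json and running kubectl apply -f pod.json creates the Pod; other Pods would reach it through its Pod IP or, more typically, through a Service.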

How does Kubernetes handle scaling of applications?

Kubernetes supports scaling at two levels: the Pods that run an application and the nodes that run the Pods. Horizontal scaling changes the number of Pod replicas, either manually (kubectl scale) or automatically with the HorizontalPodAutoscaler (HPA), which adjusts the replica count based on CPU utilization, memory, or custom metrics. Vertical scaling changes the CPU and memory requests and limits of individual Pods; the VerticalPodAutoscaler (VPA), an add-on, can recommend or apply these values. At the node level, the Cluster Autoscaler adds nodes when Pods cannot be scheduled for lack of resources and removes nodes that are underutilized.
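The HPA's core calculation is documented as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The sketch below reproduces that formula, including the default 10% tolerance and clamping to the min/max bounds, next to an autoscaling/v2 manifest. It is a simplified model: the real controller also accounts for missing metrics, Pod readiness, and stabilization windows. The Deployment name and thresholds used here are assumptions.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA calculation: scale proportionally to metric / target."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Within tolerance: the HPA leaves the replica count alone.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Never go outside the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


def hpa_manifest(deployment: str, min_replicas: int, max_replicas: int, cpu_percent: int) -> dict:
    """autoscaling/v2 HPA targeting the average CPU utilization of a Deployment."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": f"{deployment}-hpa"},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": deployment},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    "target": {"type": "Utilization", "averageUtilization": cpu_percent},
                },
            }],
        },
    }


if __name__ == "__main__":
    # 4 Pods averaging 90% CPU against a 50% target: ceil(4 * 1.8) = 8 Pods.
    print(desired_replicas(4, 90, 50))
    print(hpa_manifest("web", 2, 10, 50)["spec"]["metrics"][0]["resource"]["target"])
```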

What is a Kubernetes Deployment, and how does it differ from a ReplicaSet?

A Kubernetes Deployment is a higher-level abstraction that manages the lifecycle of Pods and provides declarative updates to application replicas. It ensures that the desired number of Pods is running and handles scaling, rolling updates, and rollbacks. A ReplicaSet, on the other hand, is a lower-level object whose only job is to keep a specified number of Pod replicas running at any given time. Deployments manage ReplicaSets for you: each time the Pod template changes (for example, a new image tag), the Deployment creates a new ReplicaSet and gradually shifts replicas from the old one to the new one, keeping older ReplicaSets around so it can roll back. In practice, you rarely create ReplicaSets directly.
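The difference shows up in what triggers a new ReplicaSet. The sketch below builds an apps/v1 Deployment as a dict and uses a hash of the Pod template as a stand-in for the pod-template-hash label that the Deployment controller attaches to each ReplicaSet. The SHA-256 hash is an assumption for illustration; the real controller uses its own hashing scheme. The point is only that scaling leaves the template unchanged (same ReplicaSet), while changing the image changes it (new ReplicaSet).

```python
import copy
import hashlib
import json


def deployment_manifest(name: str, image: str, replicas: int = 3) -> dict:
    """apps/v1 Deployment; the selector must match the Pod template's labels."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


def selector_matches(dep: dict) -> bool:
    """True if every selector label appears on the Pod template."""
    selector = dep["spec"]["selector"]["matchLabels"]
    labels = dep["spec"]["template"]["metadata"]["labels"]
    return all(labels.get(k) == v for k, v in selector.items())


def template_hash(dep: dict) -> str:
    """Illustrative stand-in for pod-template-hash (not the controller's real algorithm)."""
    raw = json.dumps(dep["spec"]["template"], sort_keys=True).encode()
    return hashlib.sha256(raw).hexdigest()[:10]


def scaled(dep: dict, replicas: int) -> dict:
    new = copy.deepcopy(dep)
    new["spec"]["replicas"] = replicas
    return new


def with_image(dep: dict, image: str) -> dict:
    new = copy.deepcopy(dep)
    new["spec"]["template"]["spec"]["containers"][0]["image"] = image
    return new


if __name__ == "__main__":
    v1 = deployment_manifest("web", "nginx:1.25")
    print(template_hash(v1) == template_hash(scaled(v1, 5)))                # same ReplicaSet
    print(template_hash(v1) == template_hash(with_image(v1, "nginx:1.26")))  # new ReplicaSet
```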

Can you explain the concept of rolling updates in Kubernetes?

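Rolling updates are the default update strategy for Kubernetes Deployments and let you update an application without downtime. During a rolling update, new Pod replicas are created gradually while old replicas are terminated in a controlled manner. Two settings bound the process: maxSurge (how many Pods above the desired count may exist) and maxUnavailable (how many Pods below it may be unavailable); both default to 25%. Combined with readiness probes, which keep traffic away from new Pods until they are ready, this keeps the application available throughout the update. Because the Deployment keeps previous ReplicaSets, you can roll back to an earlier revision with kubectl rollout undo if issues arise.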
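To see how maxSurge and maxUnavailable translate into Pod counts, here is a small sketch that resolves them the way the Deployment controller does: percentages are rounded up for maxSurge and down for maxUnavailable, and if both come out as zero, maxUnavailable is bumped to 1 so the rollout can progress. It returns the maximum total Pods and the minimum available Pods during a rollout; the replica counts used are arbitrary.

```python
from typing import Tuple, Union


def resolve(value: Union[int, str], replicas: int, round_up: bool) -> int:
    """Turn an absolute number or a percentage string such as "25%" into a Pod count."""
    if isinstance(value, int):
        return value
    percent = int(value.rstrip("%"))
    scaled = replicas * percent
    # Integer arithmetic avoids floating-point rounding surprises.
    return -(-scaled // 100) if round_up else scaled // 100


def rollout_bounds(replicas: int, max_surge: Union[int, str] = "25%",
                   max_unavailable: Union[int, str] = "25%") -> Tuple[int, int]:
    """Return (max total Pods, min available Pods) during a rolling update."""
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        # Both resolved to zero; allow one unavailable Pod so the rollout can move.
        unavailable = 1
    return replicas + surge, replicas - unavailable


if __name__ == "__main__":
    for n in (1, 4, 10):
        print(n, rollout_bounds(n))
```

With the defaults, a single-replica Deployment surges to two Pods before removing the old one, which is why even one replica can be updated without dropping to zero available Pods.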

How does Kubernetes handle network security and access control?

Kubernetes implements network security and access control through network policies and its authentication and authorization mechanisms. NetworkPolicy objects allow fine-grained control over inbound and outbound traffic between Pods, based on labels, namespaces, IP blocks, and ports. Network policies are enforced by the network plugin, so they only take effect if the cluster's CNI plugin supports them (Calico and Cilium do, for example). Authentication and authorization are handled by the Kubernetes API server, which supports various authentication methods, including client certificates, bearer tokens, and external identity providers via OpenID Connect. Role-Based Access Control (RBAC) then defines granular permissions for users and service accounts through Roles, ClusterRoles, and their bindings.
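Here is a sketch of both mechanisms, generating the manifests from Python as JSON. It assumes hypothetical Pods labeled app: api and app: frontend, an API port of 8080, a namespace called shop, and a service account named ci-bot. The NetworkPolicy lets only frontend Pods reach the API Pods on TCP 8080 (once a policy selects a Pod for ingress, any ingress traffic it doesn't allow is denied), and the Role and RoleBinding give the service account read-only access to Pods in that namespace.

```python
import json

NS = "shop"    # hypothetical namespace
SA = "ci-bot"  # hypothetical service account


def network_policy() -> dict:
    """Allow ingress to app=api Pods only from app=frontend Pods on TCP 8080."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-frontend-to-api", "namespace": NS},
        "spec": {
            "podSelector": {"matchLabels": {"app": "api"}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": "frontend"}}}],
                "ports": [{"protocol": "TCP", "port": 8080}],
            }],
        },
    }


def pod_reader_rbac() -> list:
    """Role granting read-only access to Pods, bound to a service account."""
    role = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": "pod-reader", "namespace": NS},
        "rules": [{"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]}],
    }
    binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": "read-pods", "namespace": NS},
        "subjects": [{"kind": "ServiceAccount", "name": SA, "namespace": NS}],
        "roleRef": {"apiGroup": "rbac.authorization.k8s.io", "kind": "Role", "name": "pod-reader"},
    }
    return [role, binding]


if __name__ == "__main__":
    # A v1 List lets kubectl apply -f handle all three objects from one file.
    bundle = {"apiVersion": "v1", "kind": "List", "items": [network_policy(), *pod_reader_rbac()]}
    print(json.dumps(bundle, indent=2))
```

After applying it, kubectl auth can-i list pods --as=system:serviceaccount:shop:ci-bot -n shop should answer "yes", while the same check for delete should answer "no".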

Can you give an example of how Kubernetes can be used to deploy a highly available application?

Sure! Let's consider deploying a web application. A Deployment runs multiple replicas of the application Pods, and scheduling rules such as topology spread constraints or Pod anti-affinity place them on different nodes (or availability zones). A Service load-balances incoming traffic across the replicas, so if one Pod or node fails, the application remains accessible through the others. Readiness probes keep traffic away from Pods that are not ready to serve, and the Deployment's controller replaces failed Pods automatically to restore the desired replica count.
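A minimal sketch of that setup, assuming a hypothetical image named example/web:1.0 that listens on port 8080 and serves a /healthz endpoint: three replicas, a topology spread constraint that asks the scheduler to spread them across nodes, a readiness probe, and a LoadBalancer Service in front. ScheduleAnyway is a soft preference; DoNotSchedule would make the spreading strict.

```python
import json

LABELS = {"app": "web"}


def ha_deployment(replicas: int = 3) -> dict:
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "web"},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": LABELS},
            "template": {
                "metadata": {"labels": LABELS},
                "spec": {
                    # Keep per-node replica counts within 1 of each other when possible.
                    "topologySpreadConstraints": [{
                        "maxSkew": 1,
                        "topologyKey": "kubernetes.io/hostname",
                        "whenUnsatisfiable": "ScheduleAnyway",
                        "labelSelector": {"matchLabels": LABELS},
                    }],
                    "containers": [{
                        "name": "web",
                        "image": "example/web:1.0",  # hypothetical image
                        "ports": [{"containerPort": 8080}],
                        # Only ready Pods receive traffic from the Service.
                        "readinessProbe": {
                            "httpGet": {"path": "/healthz", "port": 8080},  # assumed endpoint
                            "periodSeconds": 5,
                        },
                    }],
                },
            },
        },
    }


def web_service() -> dict:
    """LoadBalancer Service spreading traffic over all ready replicas."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "web"},
        "spec": {
            "type": "LoadBalancer",
            "selector": LABELS,
            "ports": [{"port": 80, "targetPort": 8080, "protocol": "TCP"}],
        },
    }


if __name__ == "__main__":
    print(json.dumps({"apiVersion": "v1", "kind": "List",
                      "items": [ha_deployment(), web_service()]}, indent=2))
```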

What is a namespace in Kubernetes?

A namespace in Kubernetes is a logical boundary that segregates and organizes resources within a cluster. It provides a scope for names: two objects of the same kind can share a name as long as they live in different namespaces. Namespaces are also the unit to which RBAC permissions and resource quotas are commonly applied. Not every resource is namespaced; Nodes, PersistentVolumes, and ClusterRoles, for example, are cluster-wide. Which namespace does a Pod use if you don't specify one? It is created in the "default" namespace, unless your current kubectl context has been configured with a different namespace.
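The lookup order can be summarized as a small Python function. This is a simplified model of kubectl's behavior, not its actual code: a namespace written in the manifest wins, then the --namespace flag, then the namespace set on the current kubeconfig context, and finally "default". kubectl refuses to proceed when the manifest and the flag disagree, which the sketch mirrors by raising an error.

```python
from typing import Optional


def resolve_namespace(manifest_ns: Optional[str] = None,
                      flag_ns: Optional[str] = None,
                      context_ns: Optional[str] = None) -> str:
    """Simplified model of which namespace a namespaced object ends up in."""
    if manifest_ns and flag_ns and manifest_ns != flag_ns:
        # kubectl rejects this combination instead of picking one.
        raise ValueError(
            f"manifest namespace {manifest_ns!r} does not match --namespace={flag_ns!r}"
        )
    return manifest_ns or flag_ns or context_ns or "default"


if __name__ == "__main__":
    print(resolve_namespace())                   # nothing specified anywhere
    print(resolve_namespace(context_ns="dev"))   # context has a namespace set
    print(resolve_namespace(flag_ns="staging", context_ns="dev"))
```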

How does Ingress help in Kubernetes?

Ingress is a Kubernetes API object that manages external HTTP and HTTPS access to Services within the cluster. It acts as a single entry point and routes incoming requests to the appropriate Service based on rules such as the host name and URL path. Compared with exposing each Service individually, Ingress enables name-based virtual hosting, path-based routing, and TLS termination behind one address. An Ingress resource does nothing on its own, though: the cluster needs an Ingress controller (for example, ingress-nginx) to read these rules and actually route the traffic.
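As an illustration, the sketch below builds a networking.k8s.io/v1 Ingress that terminates TLS for a hypothetical host shop.example.com (certificate in a Secret named shop-tls), sends /api to a Service named api, and sends everything else to frontend. It assumes an Ingress controller registered under the class name nginx. The route function is a toy model of Prefix path matching (path segments compared element by element, longest match wins) to show how requests are dispatched; in a real cluster the controller does this for you.

```python
from typing import List, Optional


def ingress_manifest() -> dict:
    def backend(service: str, port: int) -> dict:
        return {"service": {"name": service, "port": {"number": port}}}

    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": "shop"},
        "spec": {
            "ingressClassName": "nginx",  # assumed controller class
            "tls": [{"hosts": ["shop.example.com"], "secretName": "shop-tls"}],
            "rules": [{
                "host": "shop.example.com",
                "http": {"paths": [
                    {"path": "/api", "pathType": "Prefix", "backend": backend("api", 8080)},
                    {"path": "/", "pathType": "Prefix", "backend": backend("frontend", 80)},
                ]},
            }],
        },
    }


def _segments(path: str) -> List[str]:
    return [s for s in path.split("/") if s]


def route(ingress: dict, host: str, path: str) -> Optional[str]:
    """Toy Prefix matcher: return the backend Service name, or None if nothing matches."""
    best, best_len = None, -1
    request = _segments(path)
    for rule in ingress["spec"]["rules"]:
        if rule.get("host") not in (None, host):
            continue
        for entry in rule["http"]["paths"]:
            prefix = _segments(entry["path"])
            # Element-wise prefix match: "/api" matches "/api/v1" but not "/apis".
            if request[:len(prefix)] == prefix and len(prefix) > best_len:
                best, best_len = entry["backend"]["service"]["name"], len(prefix)
    return best


if __name__ == "__main__":
    ing = ingress_manifest()
    for p in ("/api/v1/orders", "/apis", "/"):
        print(p, "->", route(ing, "shop.example.com", p))
```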

Explain different types of services in Kubernetes.

Kubernetes offers different types of services to enable communication and access to applications. The types include:

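ClusterIP: The default type. Exposes the service on an internal virtual IP address that is reachable only from within the cluster.

NodePort: Exposes the service on a static port on each node's IP address, allowing external access to the service. A NodePort service also gets a ClusterIP.

LoadBalancer: Provisions an external load balancer through the cloud provider's integration and points it at the service. It typically builds on top of NodePort and ClusterIP.

ExternalName: Maps the service to an external DNS name by returning a CNAME record, so Pods can reach a service outside the cluster through an in-cluster name. No proxying takes place.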
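The four types differ mainly in the type field and, for ExternalName, in using externalName instead of a selector and ports. A minimal sketch of a builder for all four, assuming Pods labeled app: <name> that listen on port 8080 (hypothetical values):

```python
from typing import Dict, Optional

SERVICE_TYPES = ("ClusterIP", "NodePort", "LoadBalancer", "ExternalName")


def service(name: str, svc_type: str = "ClusterIP", *, port: int = 80,
            target_port: int = 8080, external_name: Optional[str] = None) -> Dict:
    """Build a v1 Service of the given type."""
    if svc_type not in SERVICE_TYPES:
        raise ValueError(f"unknown Service type {svc_type!r}")
    spec: Dict = {"type": svc_type}
    if svc_type == "ExternalName":
        if not external_name:
            raise ValueError("an ExternalName Service needs external_name")
        # Resolved through a DNS CNAME record; no selector, no proxying.
        spec["externalName"] = external_name
    else:
        # ClusterIP, NodePort and LoadBalancer all select Pods by label.
        spec["selector"] = {"app": name}
        spec["ports"] = [{"port": port, "targetPort": target_port, "protocol": "TCP"}]
    return {"apiVersion": "v1", "kind": "Service", "metadata": {"name": name}, "spec": spec}


if __name__ == "__main__":
    for t in ("ClusterIP", "NodePort", "LoadBalancer"):
        print(service("web", t)["spec"]["type"])
    print(service("billing-db", "ExternalName", external_name="db.example.com")["spec"])
```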

Can you explain the concept of self-healing in Kubernetes and give examples of how it works?

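Self-healing is a fundamental characteristic of Kubernetes: the cluster continuously compares its actual state with the desired state and corrects any difference. Some examples of how it works:

- If a container crashes, the kubelet restarts it according to the Pod's restartPolicy.

- If a container fails its liveness probe, the kubelet kills and restarts that container.

- If a container fails its readiness probe, its Pod is removed from Service endpoints until it recovers, so it stops receiving traffic.

- If a Pod is deleted or its node fails, the ReplicaSet behind the Deployment notices that fewer replicas are running than desired and creates a replacement Pod, which the scheduler places on a healthy node.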
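The probes behind several of these examples are declared on the container. The sketch below assumes a hypothetical app on port 8080 exposing /healthz and /ready. The restarts function is a deliberately simplified model of failureThreshold: the kubelet restarts the container after that many consecutive liveness failures. The real kubelet also applies initialDelaySeconds, probe timeouts, and an increasing restart back-off, which the model ignores.

```python
from typing import Iterable


def probed_container() -> dict:
    return {
        "name": "web",
        "image": "example/web:1.0",  # hypothetical image
        "ports": [{"containerPort": 8080}],
        # Failing this probe restarts the container.
        "livenessProbe": {
            "httpGet": {"path": "/healthz", "port": 8080},  # assumed endpoint
            "periodSeconds": 10,
            "failureThreshold": 3,
        },
        # Failing this probe only removes the Pod from Service endpoints.
        "readinessProbe": {
            "httpGet": {"path": "/ready", "port": 8080},  # assumed endpoint
            "periodSeconds": 5,
        },
    }


def restarts(results: Iterable[bool], failure_threshold: int = 3) -> int:
    """Count container restarts for a sequence of liveness results (True = success)."""
    count, consecutive_failures = 0, 0
    for ok in results:
        if ok:
            consecutive_failures = 0
            continue
        consecutive_failures += 1
        if consecutive_failures >= failure_threshold:
            count += 1                # kubelet kills and restarts the container
            consecutive_failures = 0  # probing starts fresh after the restart
    return count


if __name__ == "__main__":
    print(restarts([True, False, False, True, False, False, False]))
```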

How does Kubernetes handle storage management for containers?

Kubernetes manages storage through PersistentVolumes (PVs) and PersistentVolumeClaims (PVCs). A PV represents a piece of storage in the cluster and abstracts the underlying details (a cloud disk, an NFS share, and so on), while a PVC is a request for storage of a certain size and access mode. Kubernetes binds each PVC to a matching PV. PVs can be created ahead of time by an administrator (static provisioning), or created on demand by a provisioner when a PVC references a StorageClass (dynamic provisioning). Pods then mount the PVC as a volume, so their data survives container restarts and Pod rescheduling.
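A minimal sketch, assuming a StorageClass named standard (the default name in local clusters such as kind and minikube; check kubectl get storageclass in yours): a PVC requesting 5Gi, a Pod that mounts it, and a simplified matcher showing the main criteria used when a PVC is bound to an existing PV (same StorageClass, compatible access modes, enough capacity). The real binder checks more, such as volume mode, label selectors, and node affinity.

```python
from typing import Dict

_BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity: str) -> int:
    """Parse simple binary quantities such as "5Gi" (other formats are not handled)."""
    for suffix, factor in _BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def pvc(name: str, size: str, storage_class: str = "standard") -> Dict:
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,  # assumed StorageClass name
            "resources": {"requests": {"storage": size}},
        },
    }


def pod_using(claim: str) -> Dict:
    """Pod that mounts the claim at /var/lib/data (illustrative path)."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "data-app"},
        "spec": {
            "containers": [{
                "name": "app",
                "image": "busybox:1.36",
                "command": ["sh", "-c", "date >> /var/lib/data/log.txt && sleep 3600"],
                "volumeMounts": [{"name": "data", "mountPath": "/var/lib/data"}],
            }],
            "volumes": [{"name": "data", "persistentVolumeClaim": {"claimName": claim}}],
        },
    }


def pv_satisfies(pv: Dict, claim: Dict) -> bool:
    """Simplified check of whether an existing PV can be bound to a PVC."""
    pv_spec, pvc_spec = pv["spec"], claim["spec"]
    return (
        pv_spec.get("storageClassName") == pvc_spec.get("storageClassName")
        and set(pvc_spec["accessModes"]) <= set(pv_spec["accessModes"])
        and to_bytes(pv_spec["capacity"]["storage"])
        >= to_bytes(pvc_spec["resources"]["requests"]["storage"])
    )
```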

How does the NodePort service work?

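The NodePort service type exposes the service on a static port, allocated by default from the range 30000-32767, on every node in the cluster. A request sent to any node's IP address on that port is forwarded (by kube-proxy) to the service and then to one of its Pods on the target port, even if no matching Pod runs on that node. NodePort is handy for development and testing but is usually avoided for production traffic: it opens the same port on every node, is limited to a high port range, requires clients to know node IP addresses, and offers no TLS termination or HTTP routing. In production, a LoadBalancer service or an Ingress is typically placed in front instead.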
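A sketch of a NodePort Service with an explicitly chosen port, plus a helper listing the addresses clients would use. It assumes the default node port range (30000-32767, configurable on the API server with --service-node-port-range), Pods labeled app: web listening on port 8080, and made-up node IPs. Omitting nodePort lets Kubernetes pick a free port from the range.

```python
from typing import Dict, List, Optional

NODE_PORT_RANGE = range(30000, 32768)  # default range, 32767 inclusive


def nodeport_service(name: str, port: int, target_port: int,
                     node_port: Optional[int] = None) -> Dict:
    if node_port is not None and node_port not in NODE_PORT_RANGE:
        raise ValueError(f"nodePort {node_port} is outside 30000-32767")
    port_spec = {"port": port, "targetPort": target_port, "protocol": "TCP"}
    if node_port is not None:
        port_spec["nodePort"] = node_port  # otherwise Kubernetes allocates one
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"type": "NodePort", "selector": {"app": name}, "ports": [port_spec]},
    }


def client_urls(node_ips: List[str], node_port: int) -> List[str]:
    """With the default externalTrafficPolicy (Cluster), every node answers on the port."""
    return [f"http://{ip}:{node_port}" for ip in node_ips]


if __name__ == "__main__":
    svc = nodeport_service("web", port=80, target_port=8080, node_port=30080)
    print(client_urls(["10.0.0.11", "10.0.0.12"], svc["spec"]["ports"][0]["nodePort"]))
```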

What is a multinode cluster and a single-node cluster in Kubernetes?

A multinode cluster is a Kubernetes cluster with multiple nodes: one or more control-plane nodes plus several worker nodes that run containerized applications and services. It offers scalability, high availability, and distribution of workloads across nodes. A single-node cluster, on the other hand, runs the control plane and the workloads on a single machine (for example, with minikube or kind). It is typically used for development, testing, or learning and provides no fault tolerance: if that machine goes down, the whole cluster goes down with it.

What is the difference between create and apply in Kubernetes?

In Kubernetes, "kubectl create" and "kubectl apply" are two different ways to manage resources from YAML files. "create" is imperative: it creates new resources in the cluster from the file and fails with an "AlreadyExists" error if a resource with the same name already exists. "apply" is declarative: it creates the resource if it doesn't exist and updates it to match the file if it does. To do this, "apply" records the configuration it applied (in an annotation, or as field ownership metadata with server-side apply) and patches only the fields that changed, which is why "apply" is the usual choice for manifests kept in version control.
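The contrast can be modeled with a toy in-memory API server. This is a hypothetical class for illustration only: create refuses to overwrite an existing object, while apply creates, updates, or reports no change, echoing kubectl's "created", "configured", and "unchanged" messages. Unlike real kubectl apply, which patches only the changed fields, the toy version replaces the stored object wholesale.

```python
import copy
from typing import Dict, Tuple


class AlreadyExists(Exception):
    pass


class ToyAPIServer:
    """Hypothetical in-memory store keyed by (kind, namespace, name)."""

    def __init__(self) -> None:
        self.objects: Dict[Tuple[str, str, str], dict] = {}

    @staticmethod
    def _key(manifest: dict) -> Tuple[str, str, str]:
        meta = manifest["metadata"]
        return manifest["kind"], meta.get("namespace", "default"), meta["name"]

    def create(self, manifest: dict) -> str:
        key = self._key(manifest)
        if key in self.objects:
            raise AlreadyExists(f"{key[0]} {key[2]!r} already exists")
        self.objects[key] = copy.deepcopy(manifest)
        return "created"

    def apply(self, manifest: dict) -> str:
        key = self._key(manifest)
        if key not in self.objects:
            self.objects[key] = copy.deepcopy(manifest)
            return "created"
        if self.objects[key] == manifest:
            return "unchanged"
        self.objects[key] = copy.deepcopy(manifest)
        return "configured"


if __name__ == "__main__":
    cm = {"apiVersion": "v1", "kind": "ConfigMap",
          "metadata": {"name": "app-config"}, "data": {"mode": "dev"}}
    api = ToyAPIServer()
    print(api.create(cm))       # first create succeeds
    try:
        api.create(cm)          # second create fails
    except AlreadyExists as err:
        print("error:", err)
    cm["data"]["mode"] = "prod"
    print(api.apply(cm))        # existing object is updated
    print(api.apply(cm))        # nothing changed this time
```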

Conclusion:

Understanding Kubernetes is crucial for DevOps engineers to effectively manage containerized environments and orchestrate applications at scale. In this blog, we delved into common Kubernetes interview questions, exploring its significance, networking, scaling, and self-healing capabilities. We also covered concepts like deployments, services, and data persistence. Armed with this knowledge, you can confidently tackle Kubernetes-related challenges and drive innovation in the world of container orchestration. Happy Kubernetes learning!

To connect with me - https://www.linkedin.com/in/subhodey/